Silhouette Stability Research

There are two main types of algorithms for extracting silhouettes: image space and object space. Image space methods were the first used to extract silhouettes. They usually perform two renderings: the first extracts the silhouette lines, and the second creates the final image. Object space methods use geometric properties of the model to identify the silhouette and contour lines. Object space methods offer more flexibility for modeling but are often more costly than image space algorithms.
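The object space idea can be made concrete with the classic silhouette test. The Python sketch below assumes a closed triangle mesh whose faces are wound counter-clockwise when seen from outside, plus a perspective eye point. An edge is a silhouette edge when one of its two adjacent faces faces the viewer and the other faces away. The function names and the list-of-tuples mesh layout are illustrative only and are not taken from our application.

```python
from collections import defaultdict


def face_normal(a, b, c):
    # Cross product of two triangle edges. It is not normalized because
    # only its sign relative to the view vector matters.
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def is_front_facing(vertices, face, eye):
    # A face is front-facing if the eye lies on the side its normal points to.
    a, b, c = (vertices[i] for i in face)
    n = face_normal(a, b, c)
    to_eye = [eye[i] - a[i] for i in range(3)]
    return sum(n[i] * to_eye[i] for i in range(3)) > 0


def silhouette_edges(vertices, faces, eye):
    # Map each undirected edge (sorted vertex pair) to the facing flags
    # of the faces that share it.
    adjacency = defaultdict(list)
    for face in faces:
        front = is_front_facing(vertices, face, eye)
        for k in range(3):
            i, j = face[k], face[(k + 1) % 3]
            adjacency[(min(i, j), max(i, j))].append(front)
    # Silhouette edges separate a front-facing face from a back-facing one.
    # Boundary edges (only one adjacent face) are skipped.
    return sorted(edge for edge, flags in adjacency.items()
                  if len(flags) == 2 and flags[0] != flags[1])
```

For example, take a unit tetrahedron with `faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]` viewed from `(1, 2, 10)`. Only the slanted face `(1, 2, 3)` is visible, so its three edges form the silhouette.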

The silhouette research I am currently working on (with a great deal of assistance from Dr Faramarz Samavati and Dr Mario Costa Sousa) extends an object space silhouette extraction data structure, the edge buffer, to achieve temporal coherence as silhouettes are animated. To do this, we compute a stability measurement for the faces and edges of the mesh.
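Here is a sketch of the basic edge buffer. As we understand the original data structure, each edge stores two bits, F and B. Every face toggles (XORs) the F bit of its three edges if it faces the viewer, and the B bit otherwise. On a closed mesh, an edge therefore ends up with both bits set exactly when it separates a front-facing face from a back-facing one. This Python sketch makes a few assumptions. Per-face orientation flags are taken as already computed, and a dictionary keyed by sorted vertex pairs stands in for the original table layout. The bit constants are hypothetical, and the sketch does not include our stability extension.

```python
FRONT = 0b10  # hypothetical encoding of the F bit
BACK = 0b01   # hypothetical encoding of the B bit


def build_edge_buffer(faces, front_facing):
    """Toggle the F or B bit of each face's edges.

    front_facing[f] is True when faces[f] faces the viewer.
    """
    buffer = {}
    for face, front in zip(faces, front_facing):
        bit = FRONT if front else BACK
        for k in range(3):
            i, j = face[k], face[(k + 1) % 3]
            key = (min(i, j), max(i, j))
            # XOR toggles the bit, so two faces with the same
            # orientation cancel each other out on a shared edge.
            buffer[key] = buffer.get(key, 0) ^ bit
    return buffer


def silhouette_from_buffer(buffer):
    # Both bits set: one front-facing and one back-facing face share
    # the edge (assuming every edge has exactly two adjacent faces).
    return sorted(edge for edge, bits in buffer.items()
                  if bits == FRONT | BACK)
```

Because the bits are simply toggled, the buffer can be rebuilt cheaply each frame from new orientation flags without storing face adjacency explicitly.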

We are very excited about this stability measurement, as it definitely improves the temporal coherence of silhouettes. We have also found this measurement very useful for applying several non-photorealistic rendering (NPR) styles. This is an exciting NPR result because our method does not rely on chaining silhouette edges (often an issue in other works). In our renderings we can vary the number of edges we wish to include.
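One way to vary how many edges are drawn is to rank them by stability and keep only a chosen fraction. The sketch below assumes that each edge already has a scalar stability score and that a higher score means more stable. Both the score and the `select_edges` helper are hypothetical placeholders, not the measurement defined in our paper.

```python
import math


def select_edges(edge_stability, fraction):
    """Keep the most stable `fraction` (0..1) of the edges.

    edge_stability maps an edge key to a hypothetical stability score.
    Treating higher scores as more stable is an assumption of this sketch.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    # Rank edges from most to least stable.
    ranked = sorted(edge_stability, key=edge_stability.get, reverse=True)
    # Round up so that any non-zero fraction keeps at least one edge.
    count = math.ceil(fraction * len(ranked))
    return ranked[:count]
```

Lowering `fraction` thins the drawing to its most stable edges, and raising it toward 1.0 restores the full set.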

This work was published at the Spring Conference on Computer Graphics in Budmerice, Slovakia, in April 2004. A copy of the paper can be found here.

The application we used to produce these results is available here.

We are currently working on expanding this work into a journal publication.

Below are movies that display our system's temporal coherence.

(Sorry, they're not in synch!)

An animation of a conventional object-space silhouette.

An animation of a silhouette rendered by our system.

The following figures show various results of this research:

A biplane shaded according to our stability measurement.

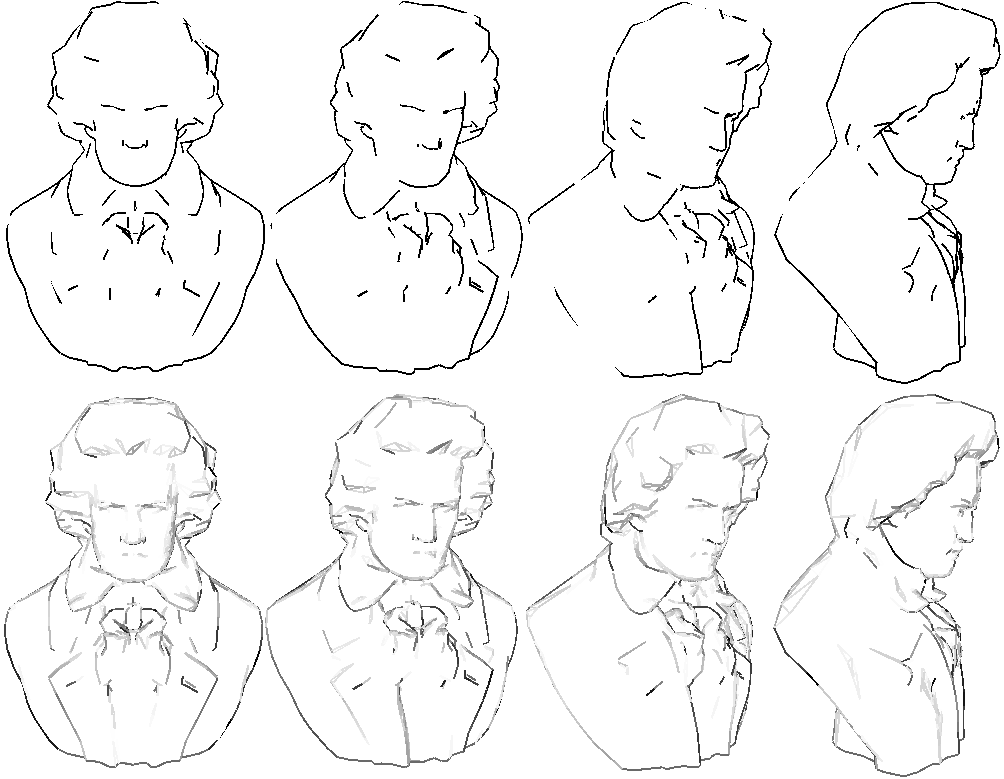

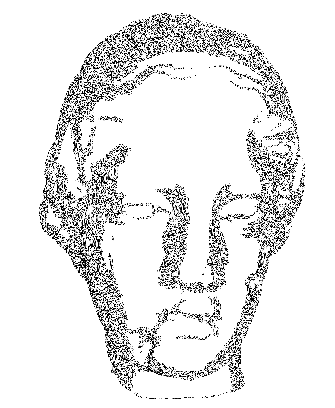

The top row of this figure shows the Beethoven model being rotated with a standard silhouette extraction. The bottom row shows the silhouette with our local stability rendering.

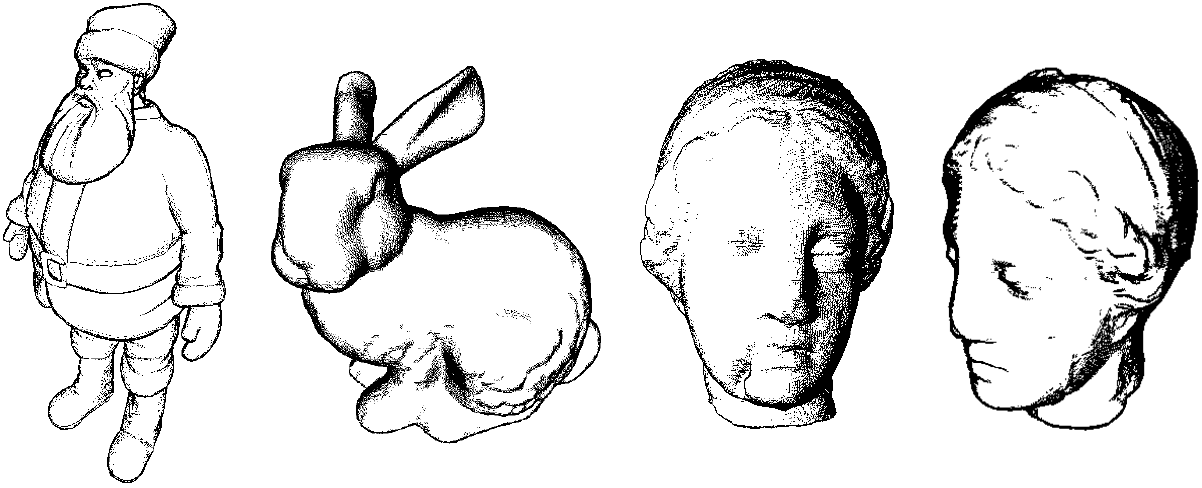

Several models rendered using precise ink strokes.

A locally shaded sun.

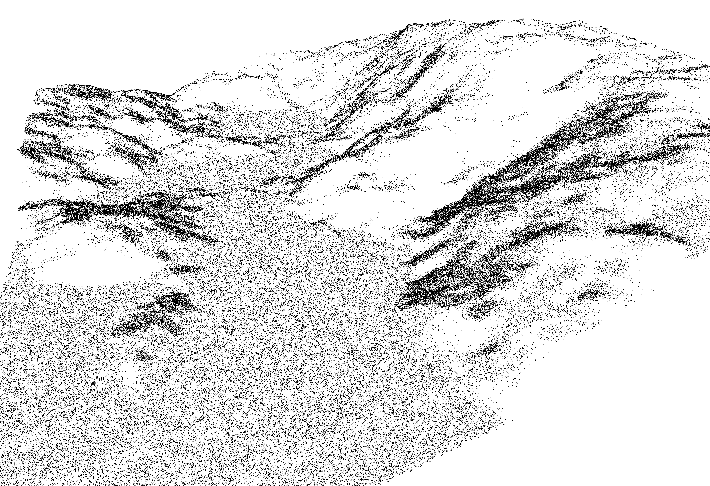

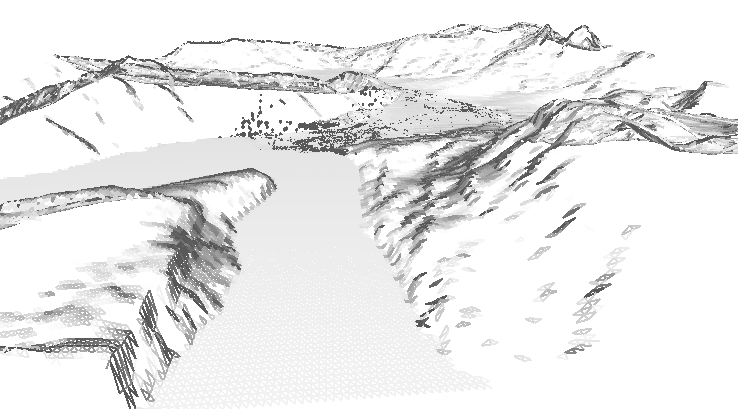

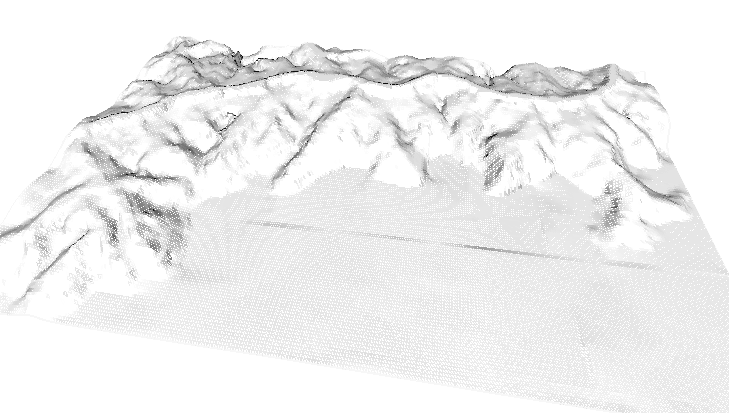

A terrain rendered by applying ink textures. The ink texture applied to each face of the mesh is chosen based on that face's stability.
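As a hypothetical illustration of choosing a texture per face, the sketch below clamps a face's stability to [0, 1] and quantizes it into one of several ink textures. Several details are assumptions for illustration: the normalization, the equal-width bins, the `choose_ink_texture` name, and the ordering of `textures` (lowest stability bin first). The mapping used in our paper may differ.

```python
def choose_ink_texture(stability, textures):
    """Pick an ink texture for a face from its stability score.

    Assumes stability is normalized to [0, 1] and that `textures` is
    ordered from the lowest stability bin to the highest.
    """
    if not textures:
        raise ValueError("need at least one texture")
    # Clamp out-of-range scores into [0, 1].
    s = min(max(stability, 0.0), 1.0)
    # Equal-width bins; a score of exactly 1.0 falls into the last bin.
    index = min(int(s * len(textures)), len(textures) - 1)
    return textures[index]
```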

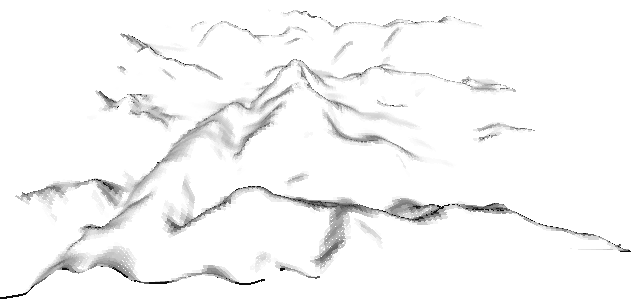

A local stability shaded terrain.

A rendering of a wolf with faces shaded according to our stability measure. The silhouette has been fixed while the model is rotated.

The Igea artifact model rendered with ink textures.

A local stability shaded terrain.

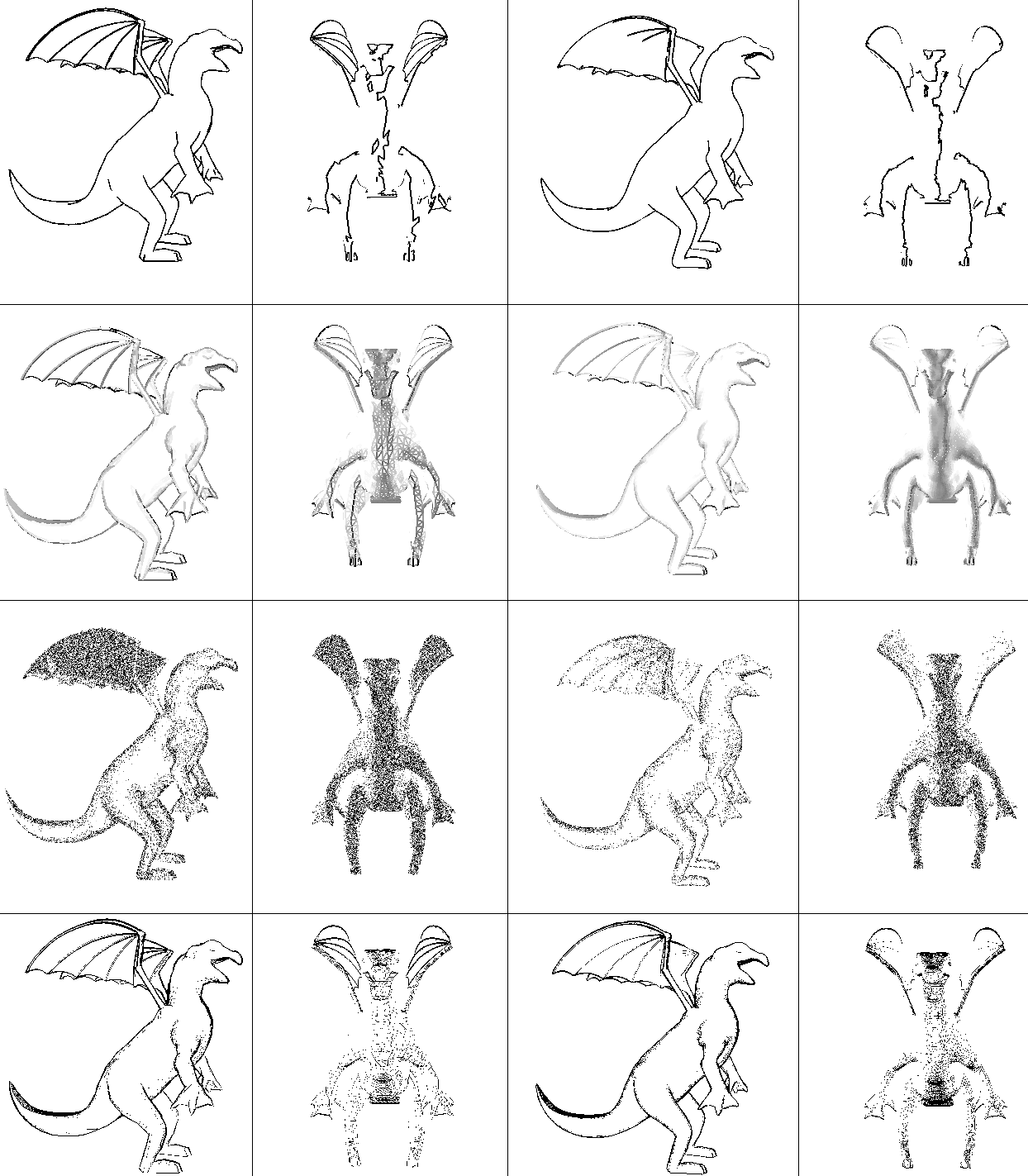

Each row of this figure shows several of the rendering styles we have experimented with on a single model. The first column shows the original model, and the second shows the same model with its silhouette fixed while the model is rotated. The third column shows a subdivided model, and the fourth again shows the result of fixing the silhouette and rotating the model. From top to bottom, the rows show a conventional silhouette rendering, our stability shading, ink tone texture mapping, and ink stroke renderings.